EtherSAM: The New Standard in Ethernet Service Testing—Part 1

As Ethernet continues to evolve as the transport technology of choice, networks have shifted their focus from purely moving data to providing entertainment and new applications in the interconnected world. Ethernet-based services such as mobile backhaul, business and wholesale services need to carry a variety of applications, namely voice, video, e-mail, online trading and others. These new applications impose new requirements on network performance and on the methodologies used to validate the performance of these Ethernet services.

This article presents EtherSAM or ITU-T Y.1564, the new ITU-T draft standard for turning up, installing and troubleshooting Ethernet-based services. EtherSAM is the only standard test methodology that allows for complete validation of Ethernet service-level agreements (SLAs) in a single, significantly faster test and with the highest level of accuracy.

The Reality of Today’s Networks

Ethernet networks now carry real-time and sensitive services. By service, we are referring to the various types of traffic that the network can carry. Generally, all network traffic can be classified under three traffic types: data, real-time and high-priority. Each traffic type is affected differently by the network characteristics and must be groomed and shaped to meet its minimum performance objectives.

| Traffic Type | Main Applications | Examples of Services |
| --- | --- | --- |
| Data | Non-real-time or data transport | |
| Real-time | Real-time broadcast that cannot be recreated once lost | |
| High-priority | Mandatory traffic used to maintain stability in the network | |

To assure quality of service (QoS), providers need to properly configure their networks to define how the traffic will be prioritized in the network. This is accomplished by assigning different levels of priority to each type of service and accurately configuring network prioritization algorithms. QoS enforcement refers to the method used to differentiate the traffic of various services via specific fields in the frames, thus providing better service to some frames than to others. These fields allow a network element to discriminate between high- and low-priority traffic and service each accordingly.

Importance of the SLA

The service-level agreement (SLA) is a binding contract between a service provider and a customer, which guarantees the minimum performance that will be assured for the services provided. These SLAs specify the key forwarding characteristics and the minimum performance guaranteed for each characteristic.

Typical SLA Example

| Traffic Type | Real-Time Data | High-Priority Data | Best-Effort Data |
| --- | --- | --- | --- |
| CIR (Mbit/s) (green traffic) | 5 | 10 | 2.5 |
| EIR (Mbit/s) (yellow traffic) | 0 | 5 | 5 |
| Frame delay (ms) | < 5 | 5-15 | < 30 |
| Frame delay variation (ms) | < 1 | n/a | n/a |
| Frame loss (%) | < 0.001 | < 0.05 | < 0.05 |
| VLAN | 100 | 200 | 300 |

Customer traffic is classified into three traffic classes, and each is assigned a specific color: green for committed traffic, yellow for excess traffic and red for discarded traffic.

- Committed information rate (CIR) (green traffic): refers to bandwidth that is guaranteed available at all times to a specific service; for green traffic, minimum performance objectives (i.e., key performance indicators or KPIs) are guaranteed to be met.

- Excess information rate (EIR) (yellow traffic): refers to excess bandwidth above CIR that may be available depending on network loading and usage; for yellow traffic, minimum performance objectives are not guaranteed to be met.

- Discarded traffic (red traffic): refers to traffic that exceeds the CIR, or the combined CIR and EIR rate where an EIR is defined, and that cannot be forwarded without disrupting other services; red traffic is therefore discarded.

| Traffic Class | Bandwidth | Forwarding | KPIs |
| --- | --- | --- | --- |
| Green traffic | 0 to CIR | Guaranteed forwarding | KPIs are guaranteed |
| Yellow traffic | CIR to EIR | Best effort | KPIs are not guaranteed |
| Red traffic | > EIR or CIR | Discarded traffic | Not applicable |
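The color scheme above can be sketched in a few lines of Python. This is a minimal illustration, not part of the standard: the names `BandwidthProfile` and `split_by_color` are hypothetical, and the sketch assumes the EIR is expressed as excess bandwidth on top of the CIR (as the SLA example suggests, where real-time traffic has an EIR of 0), so that red traffic begins at CIR + EIR.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BandwidthProfile:
    cir_mbps: float  # committed information rate
    eir_mbps: float  # excess information rate, assumed to sit on top of the CIR


def split_by_color(offered_mbps: float, profile: BandwidthProfile) -> dict[str, float]:
    """Split an offered rate into its green, yellow and red portions (Mbit/s)."""
    # Green: everything up to the CIR.
    green = min(offered_mbps, profile.cir_mbps)
    # Yellow: whatever exceeds the CIR, capped at the EIR.
    yellow = min(max(offered_mbps - profile.cir_mbps, 0.0), profile.eir_mbps)
    # Red: whatever exceeds CIR + EIR.
    red = max(offered_mbps - profile.cir_mbps - profile.eir_mbps, 0.0)
    return {"green": green, "yellow": yellow, "red": red}


# High-priority service from the SLA example: CIR 10 Mbit/s, EIR 5 Mbit/s.
high_priority = BandwidthProfile(cir_mbps=10.0, eir_mbps=5.0)
print(split_by_color(18.0, high_priority))
# {'green': 10.0, 'yellow': 5.0, 'red': 3.0}
```

With 18 Mbit/s offered, 10 Mbit/s is committed, 5 Mbit/s is forwarded on a best-effort basis and the remaining 3 Mbit/s is expected to be discarded.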

Key Performance Indicators

Key performance indicators (KPIs) are specific traffic characteristics that indicate the minimum performance of a particular traffic profile.

Under green traffic conditions, the network must guarantee that these minimum performance requirements are met for all forwarded traffic. Typical KPIs include:

Bandwidth

Bandwidth refers to the maximum amount of data that can be forwarded. This measurement is a ratio of the total amount of traffic forwarded during a measurement window of one second. Bandwidth can either be “committed” or “excess” with different performance guarantees.

Bandwidth must be controlled, as multiple services typically share a link. Therefore, each service must be limited in order not to affect another service. Generating traffic over the bandwidth limit usually leads to frame buffering, congestion and frame loss or service outages.

Frame Delay (Latency)

Frame delay, or latency, is a measurement of the time delay between a packet’s transmission and its reception. Typically, this is a round-trip measurement, meaning that a single result covers both the near-end to far-end and the far-end to near-end directions. This measurement is critical for voice applications, as too much latency can affect call quality, leading to the perception of echoes, incoherent conversations or even dropped calls.

Frame Loss

Frame loss can occur for numerous reasons such as transmission errors or network congestion. Errors due to a physical phenomenon can occur during frame transmission, resulting in frames being discarded by networking devices such as switches and routers, based on the frame check sequence field comparison. Network congestion also causes frames to be discarded, as networking devices must drop frames in order not to saturate a link in congestion conditions.
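As a worked example, frame loss is commonly expressed as the percentage of transmitted frames that never arrive. The helper below is a hypothetical sketch with made-up counters; it simply shows how strict the 0.001 % objective from the SLA example is.

```python
def frame_loss_pct(tx_frames: int, rx_frames: int) -> float:
    """Percentage of transmitted frames that were not received."""
    if tx_frames <= 0:
        raise ValueError("tx_frames must be positive")
    return 100 * (tx_frames - rx_frames) / tx_frames


# Hypothetical counters: 5 frames lost out of one million sent.
loss = frame_loss_pct(1_000_000, 999_995)
print(loss)          # 0.0005
print(loss < 0.001)  # True: within the real-time objective of the SLA example
```

An objective of less than 0.001 % allows fewer than one lost frame per 100,000 transmitted, which is why short test runs can easily miss intermittent loss.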

Frame Delay Variation (Packet Jitter)

Frame delay variation, or packet jitter, refers to the variability in arrival time between packet deliveries. As packets travel through a network, they are often queued and sent in bursts to the next hop. Random prioritization may occur, resulting in packet transmission at random rates. Packets are therefore received at irregular intervals. This jitter translates into stress on the receiving buffers of the end nodes, where buffers can be overused or underused when there are large swings of jitter.

Real-time applications such as voice and video are especially sensitive to packet jitter. Buffers are designed to store a certain quantity of video or voice packets, which are then processed at regular intervals to provide a smooth and error-free transmission to the end user. Too much jitter will affect the quality of experience (QoE) because packets arriving at a fast rate will cause the buffers to overfill, resulting in packet loss; packets arriving at a slow rate will cause buffers to empty, leading to still images or sound.
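One common way to quantify this variability, shown here as an illustrative sketch rather than as the method used by any particular test set, is to take the absolute difference in delay between consecutive frames. The function name and the delay values are hypothetical.

```python
from statistics import mean


def frame_delay_variation(delays_ms: list[float]) -> list[float]:
    """Absolute difference in delay between each pair of consecutive frames."""
    return [abs(later - earlier) for earlier, later in zip(delays_ms, delays_ms[1:])]


# Hypothetical per-frame delays in milliseconds.
delays = [4.0, 4.5, 4.0, 6.0, 4.0]
fdv = frame_delay_variation(delays)
print(fdv)        # [0.5, 0.5, 2.0, 2.0]
print(max(fdv))   # 2.0
print(mean(fdv))  # 1.25
```

Measured against the real-time objective of less than 1 ms in the SLA example, this stream would fail on its peak value even though every individual delay stays close to the 5 ms delay objective.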

| Traffic Type | Data | Real-Time Traffic | High-Priority Traffic |
| --- | --- | --- | --- |
| Bandwidth | Very sensitive | Sensitive | Sensitive |
| Frame loss | Very sensitive | Very sensitive | Very sensitive |
| Frame delay | Sensitive | Sensitive | Sensitive |
| Frame delay variation | Not sensitive | Very sensitive | Not sensitive |

Current Testing Methodology: RFC 2544

RFC 2544 has been the most widely used Ethernet service testing methodology. This series of subtests provides a methodology to measure throughput, round-trip latency, burst and frame loss.

It was initially introduced as a benchmarking methodology for network interconnect devices in the lab. However, since RFC 2544 was able to measure throughput, burstability, frame loss and latency, and because it was the only existing standardized methodology, it was also used for Ethernet service testing in the field.

While this methodology provides key parameters to qualify the network, it is no longer sufficient to fully validate today’s Ethernet services. More specifically, RFC 2544 does not include all required measurements such as packet jitter, QoS measurement and multiple concurrent service levels. Additionally, since RFC 2544 requires the performance of multiple, sequential tests to validate complete SLAs, this test method takes several hours, proving to be both time-consuming and costly for operators. There is now a requirement to simulate all types of services that will run on the network and simultaneously qualify all key SLA parameters for each of these services.

Revolutionary Testing Methodology: EtherSAM (ITU-T Y.1564)

To resolve issues with existing methodologies, the ITU-T has introduced a new test standard: the ITU-T Y.1564 methodology, which is aligned with the requirements of today’s Ethernet services. EXFO is the first to implement EtherSAM—the Ethernet service testing methodology based on this new standard—into its Ethernet testing products.

EtherSAM enables complete validation of all SLA parameters in a single test to ensure optimized QoS. Contrary to other methodologies, it supports new multiservice offerings. In fact, EtherSAM can simulate all types of services that will run on the network and simultaneously qualify all key SLA parameters for each of these services. It also validates the QoS mechanisms provisioned in the network to prioritize the different service types, resulting in more accurate validation and much faster deployment and troubleshooting. Moreover, EtherSAM offers additional capabilities such as bidirectional measurements.

EtherSAM (Y.1564) is based on the principle that the majority of service issues are found in two distinct categories: a) in the configuration of the network elements that carry the service or b) in the performance of the network during high load conditions when multiple services cause congestion.

Network Configuration

Forwarding devices such as switches, routers, bridges and network interface units are the basis of any network as they interconnect segments. These forwarding devices must be properly configured to ensure that traffic is adequately groomed and forwarded according to its service level.

If a service is not correctly configured on a single device within the end-to-end path, network performance can be greatly affected as services may not be properly implemented. This may lead to service outage and network-wide issues such as congestion and link failures. Therefore, a very important part of the testing effort is to ensure that devices are properly configured to handle the network traffic as intended.

Service Performance

Service performance refers to the ability of the network to carry multiple services at their maximum guaranteed rate without any degradation in performance; i.e., KPIs must remain within an acceptable range.

As network devices come under load, they must make quality decisions, prioritizing one traffic flow over another to meet the KPIs set for each traffic class. With only one traffic class, there is no prioritization performed by the network devices since there is only one set of KPIs. As the amount of traffic flow increases, prioritization is necessary and performance failures may occur.

Service performance assessment must be conducted over a medium- to long-term period as problems typically occur in the long term and will probably not be seen with short-term testing.

The focus of EtherSAM (Y.1564) is therefore threefold:

- First, the methodology serves as a validation tool, making sure that the network complies with the SLA by ensuring that a service meets its KPI performance objectives at different rates, within the committed range.

- Second, the methodology ensures that all services carried by the network meet their KPI performance objectives at their maximum committed rate, proving that under maximum load, the network devices and paths are able to service all the traffic as designed.

- Third, service testing can be performed for a medium to long test period, confirming that network elements can properly carry all services while under stress during a soaking period.

EtherSAM: Tests and Subtests

EtherSAM comprises two tests: the network configuration test and the service test.

Network Configuration Test

The network configuration test is a per-service test that verifies the bandwidth and performance requirements of a specific service as defined by the user. The process follows three key phases and monitors all performance indicators during these steps, ensuring that they are all met at the same time.

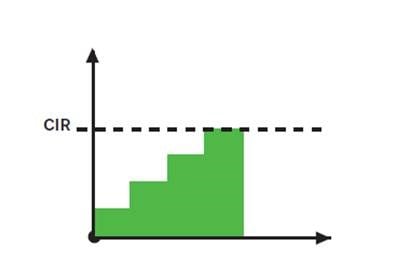

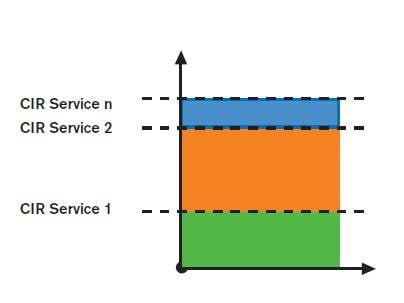

Phase 1: Minimum Data Rate to CIR

In this phase, bandwidth for a specific service is ramped up from a minimum data rate to the committed information rate (CIR). It ensures that the network is able to support this specific service at different data rates while maintaining the performance levels. It also provides a safe and effective way to ramp up utilization without overloading a network in case the service is not configured correctly.

As the service ramps up gradually to the CIR, the system automatically measures KPIs at each step to ensure that the minimum performance objectives are always met. If any performance objective fails, the phase also fails. For this phase to pass, all performance objectives must be met during the ramp-up and at the CIR.

- As service is ramped up, KPIs are measured (Rx throughput, frame loss, frame delay, frame delay variation)

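Pass/fail criteria:

| Result | Condition |
| --- | --- |
| Pass | All KPI performance objectives are met at every step of the ramp-up and at the CIR |
| Fail | Any KPI performance objective is missed at any step |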

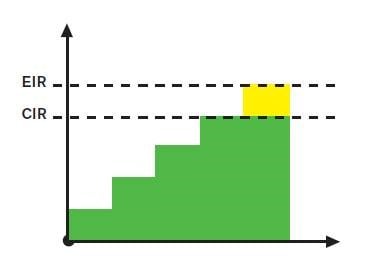
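The ramp-up logic of Phase 1 can be sketched as follows. This is a simplified illustration under stated assumptions: the step count of four, the evenly spaced rates and all names (`Kpis`, `Objectives`, `ramp_rates`, `run_ramp`, `fake_measure`) are hypothetical, the measurement itself is replaced by a stand-in function, and Rx throughput is left out for brevity.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Kpis:
    frame_loss_pct: float
    delay_ms: float
    delay_variation_ms: float


@dataclass(frozen=True)
class Objectives:
    max_frame_loss_pct: float
    max_delay_ms: float
    max_delay_variation_ms: float


def ramp_rates(cir_mbps: float, steps: int = 4) -> list[float]:
    """Evenly spaced test rates ending exactly at the CIR (step count is an assumption)."""
    return [cir_mbps * i / steps for i in range(1, steps + 1)]


def meets(kpis: Kpis, obj: Objectives) -> bool:
    """True only when every KPI is within its objective."""
    return (kpis.frame_loss_pct < obj.max_frame_loss_pct
            and kpis.delay_ms < obj.max_delay_ms
            and kpis.delay_variation_ms < obj.max_delay_variation_ms)


def run_ramp(cir_mbps: float, obj: Objectives,
             measure: Callable[[float], Kpis], steps: int = 4) -> tuple[bool, Optional[float]]:
    """Return (passed, failing_rate); stop at the first step that misses an objective."""
    for rate in ramp_rates(cir_mbps, steps):
        if not meets(measure(rate), obj):
            return False, rate
    return True, None


def fake_measure(rate_mbps: float) -> Kpis:
    """Stand-in for a real measurement: a path that starts losing frames above 4 Mbit/s."""
    loss = 0.0 if rate_mbps <= 4.0 else 0.2
    return Kpis(frame_loss_pct=loss, delay_ms=3.0, delay_variation_ms=0.4)


# Real-time objectives from the SLA example: loss < 0.001 %, delay < 5 ms, FDV < 1 ms.
real_time = Objectives(0.001, 5.0, 1.0)
print(ramp_rates(5.0))                        # [1.25, 2.5, 3.75, 5.0]
print(run_ramp(5.0, real_time, fake_measure))  # (False, 5.0)
```

Stopping at the first failing step reflects the safety aspect described above: a misconfigured service is detected before the full committed rate is pushed into the network.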

Phase 2: CIR to EIR

In this phase, the service is ramped up from the CIR to the excess information rate (EIR). It ensures that the service’s EIR is correctly configured and that the rate can be attained. However, as per accepted principles, performance is not guaranteed in the EIR rates; therefore no KPI assessment is performed.

At this stage, the system only monitors the received throughput. Since EIR is not guaranteed, bandwidth may not be available for all traffic above the CIR. A pass condition corresponds to the CIR as the minimum received rate and the EIR as a possible maximum. Any receive rate below the CIR is considered as failed.

- Service is tested at the EIR. Since this represents yellow traffic (no performance guarantee), KPIs are not assessed. A pass/fail condition is only established for the received throughput. Although the EIR is not guaranteed, the CIR should represent the minimum throughput measured.

Pass/fail criteria:

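| Result | Condition |
| --- | --- |
| Pass | Received throughput is at least the CIR and at most the EIR |
| Fail | Received throughput is below the CIR |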

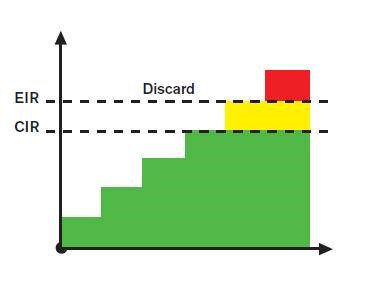
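The Phase 2 verdict depends only on received throughput, which makes it easy to express in code. The sketch below is illustrative: `phase2_passes` is a hypothetical name, the sample values are invented, and the EIR is assumed to be excess bandwidth above the CIR, so the upper bound is CIR + EIR. A real measurement would likely also need a small tolerance around both limits.

```python
def phase2_passes(rx_samples_mbps: list[float], cir_mbps: float, eir_mbps: float) -> bool:
    """Pass when every received-rate sample lies between the CIR and CIR + EIR."""
    ceiling = cir_mbps + eir_mbps
    return all(cir_mbps <= rx <= ceiling for rx in rx_samples_mbps)


# High-priority service from the SLA example: CIR 10 Mbit/s, EIR 5 Mbit/s.
print(phase2_passes([11.0, 12.5, 13.2], cir_mbps=10.0, eir_mbps=5.0))  # True
print(phase2_passes([11.0, 9.1, 13.2], cir_mbps=10.0, eir_mbps=5.0))   # False
```

In the second call, one sample drops below the committed rate, which is a failure even though the excess bandwidth itself carries no guarantee.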

Phase 3: Overshoot Testing

One of the attributes of packet transport is the capability to handle bursty traffic. Bursts can cause traffic to overshoot the EIR and surpass the available bandwidth, which usually leads to discarded traffic.

In this step, traffic is sent above the EIR and the received rate is monitored. At the very least, the CIR should be forwarded. The EIR traffic should be forwarded depending on the availability of resources. Any traffic above this maximum should be discarded so as not to overload the network. If the amount of traffic received exceeds the EIR, it means that a device is not properly configured and a fail condition is declared.

- Service is tested above the EIR. This corresponds to the red traffic, and discarding is expected. Pass/fail is performed on the bandwidth, ensuring that the service is properly rate-limited.

- Any received throughput exceeding the EIR indicates that the service is not properly configured.

Pass/fail criteria:

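| Result | Condition |
| --- | --- |
| Pass | Received throughput is at least the CIR and does not exceed the EIR |
| Fail | Received throughput exceeds the EIR |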
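The overshoot check can be sketched as a small verdict function. The name `overshoot_verdict`, the verdict strings and the 0.1 Mbit/s tolerance are assumptions made for illustration, and the rate limit is again taken to be CIR + EIR.

```python
def overshoot_verdict(rx_mbps: float, cir_mbps: float, eir_mbps: float,
                      tolerance_mbps: float = 0.1) -> str:
    """Classify the received rate when traffic is sent above the rate limit."""
    ceiling = cir_mbps + eir_mbps
    if rx_mbps > ceiling + tolerance_mbps:
        # More traffic came through than the profile allows.
        return "fail: service is not rate-limited"
    if rx_mbps < cir_mbps - tolerance_mbps:
        # Even the committed portion was not forwarded.
        return "fail: committed rate not delivered"
    return "pass"


# High-priority service (CIR 10, EIR 5) with 18 Mbit/s transmitted.
print(overshoot_verdict(18.0, 10.0, 5.0))  # fail: service is not rate-limited
print(overshoot_verdict(14.2, 10.0, 5.0))  # pass
print(overshoot_verdict(8.0, 10.0, 5.0))   # fail: committed rate not delivered
```

Receiving all 18 Mbit/s indicates that no policer or shaper is enforcing the profile somewhere along the path.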

These three phases are performed per service; therefore if multiple services exist on the network, each service should be tested sequentially. This ensures that there is no interference from other streams and specifically measures the bandwidth and performance of the service alone.

At the end of the Ethernet network configuration test, the user has a clear assessment of whether the network elements and path have been properly configured to forward the services while meeting the minimum KPI performance objectives.

Service Test

While the network configuration test concentrates on the proper configuration of each service in the network elements, the service test focuses on the enforcement of the QoS parameters under committed conditions, replicating real-life services.

In this test, all configured services are generated at the same time, each at its CIR, for a soaking period that can range from a few minutes to days. During this period, the performance of each service is individually monitored. If any service fails to meet its performance parameters, a fail condition is declared.

- All configured services are generated simultaneously at their maximum rate with performance guaranteed (CIR).

- All KPIs are monitored. If any service experiences a KPI below the minimum performance objective, a fail condition is declared.

Pass/fail criteria:

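| Result | Condition |
| --- | --- |
| Pass | Every service meets all of its KPI performance objectives throughout the test |
| Fail | Any service misses a KPI performance objective |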
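The per-service monitoring described above can be sketched as follows. The data structures, the function `failing_services` and the sample values are all hypothetical; only two KPIs are tracked to keep the example short, and the objectives are taken from the SLA example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Objectives:
    max_delay_ms: float
    max_frame_loss_pct: float


@dataclass(frozen=True)
class Sample:
    delay_ms: float
    frame_loss_pct: float


def failing_services(objectives: dict[str, Objectives],
                     samples: dict[str, list[Sample]]) -> list[str]:
    """Names of services with at least one sample that misses an objective."""
    failed = []
    for name, obj in objectives.items():
        if any(s.delay_ms >= obj.max_delay_ms or s.frame_loss_pct >= obj.max_frame_loss_pct
               for s in samples[name]):
            failed.append(name)
    return failed


objectives = {
    "real-time": Objectives(max_delay_ms=5.0, max_frame_loss_pct=0.001),
    "best-effort": Objectives(max_delay_ms=30.0, max_frame_loss_pct=0.05),
}
# Hypothetical KPI samples collected while both services run at their CIR.
samples = {
    "real-time": [Sample(3.1, 0.0), Sample(6.4, 0.0)],
    "best-effort": [Sample(12.0, 0.01), Sample(14.5, 0.02)],
}
failed = failing_services(objectives, samples)
print("fail" if failed else "pass", failed)  # fail ['real-time']
```

A single out-of-range sample on one service is enough to fail the whole test, which matches the rule that every service must hold its KPIs for the entire soaking period.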

The combination of these two tests provides all the critical results in a simple and complete test methodology. The network configuration test quickly identifies configuration faults by focusing on each service and how it is handled by the network elements along the path. The service test then focuses on the network capacity to handle and guarantee all services simultaneously. Once both tests have passed, the circuit is ready to be activated and put in service.

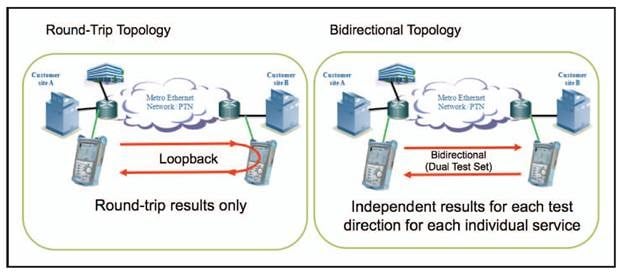

EtherSAM Test Topologies: Loopback and Bidirectional (Dual Test Set)

EtherSAM can also execute round-trip measurements with a loopback device. In this case, the results reflect the average of both test directions, from the test set to the loopback point and back to the test set. In this scenario, the loopback functionality can be performed by another test instrument in Loopback mode or by a network interface device in Loopback mode.

The same test can also be run in Dual Test-Set mode. In this case, two test sets, one designated as local and the other as remote, are used to communicate and independently run tests per direction. This provides much more precise test results such as independent assessment per direction and the ability to quickly determine which direction of the link is experiencing failure.
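The difference between the two topologies can be illustrated with a small numeric sketch. The function names and delay values are hypothetical, and the example assumes that the delay objective applies to each direction separately.

```python
def loopback_result(forward_ms: float, reverse_ms: float, objective_ms: float) -> str:
    """A loopback test sees only the round trip, reported here as a per-direction average."""
    average_ms = (forward_ms + reverse_ms) / 2
    return "pass" if average_ms < objective_ms else "fail (direction unknown)"


def dual_test_set_result(forward_ms: float, reverse_ms: float,
                         objective_ms: float) -> dict[str, str]:
    """Two test sets assess each direction on its own."""
    return {
        "local to remote": "pass" if forward_ms < objective_ms else "fail",
        "remote to local": "pass" if reverse_ms < objective_ms else "fail",
    }


# Asymmetric path: 1 ms in one direction, 8 ms in the other, 5 ms objective.
print(loopback_result(1.0, 8.0, 5.0))
# pass
print(dual_test_set_result(1.0, 8.0, 5.0))
# {'local to remote': 'pass', 'remote to local': 'fail'}
```

In this example the averaged loopback result hides a problem in the return direction that the dual test-set measurement exposes immediately.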