64G Fibre Channel in the data center: top 5 questions answered!

Why is 64G Fibre Channel the answer to today’s data center storage challenges? How does this protocol work and how is 64G validated in lab or field settings? Two of EXFO’s fiber optic experts—Anabel Alarcon (AA), Product Manager-Manufacturing, Design and Research solutions and David J. Rodgers (DJR), Business Development Manager at EXFO and board member of the Fibre Channel Industry Association (FCIA)—answer these and related questions about the Fibre Channel protocol and 64G.

What is Fibre Channel and why does this protocol matter for data centers and interconnectivity?

Fibre Channel is a secure, high-speed data transfer protocol primarily used for accessing storage area networks (SANs) inside data centers. The Fibre Channel protocol was conceived and first defined in 1988. It has evolved over the years, and today it is managed by the T11 workgroup of the InterNational Committee for Information Technology Standards (INCITS) and evangelized by the Fibre Channel Industry Association (FCIA).

What are the main features of Fibre Channel?

Fibre Channel is an exceptionally robust and proven protocol supporting high bandwidth rates. It’s purpose-built for the secure storage application space and its hallmarks include scalability, low latency and congestion-free communication links.

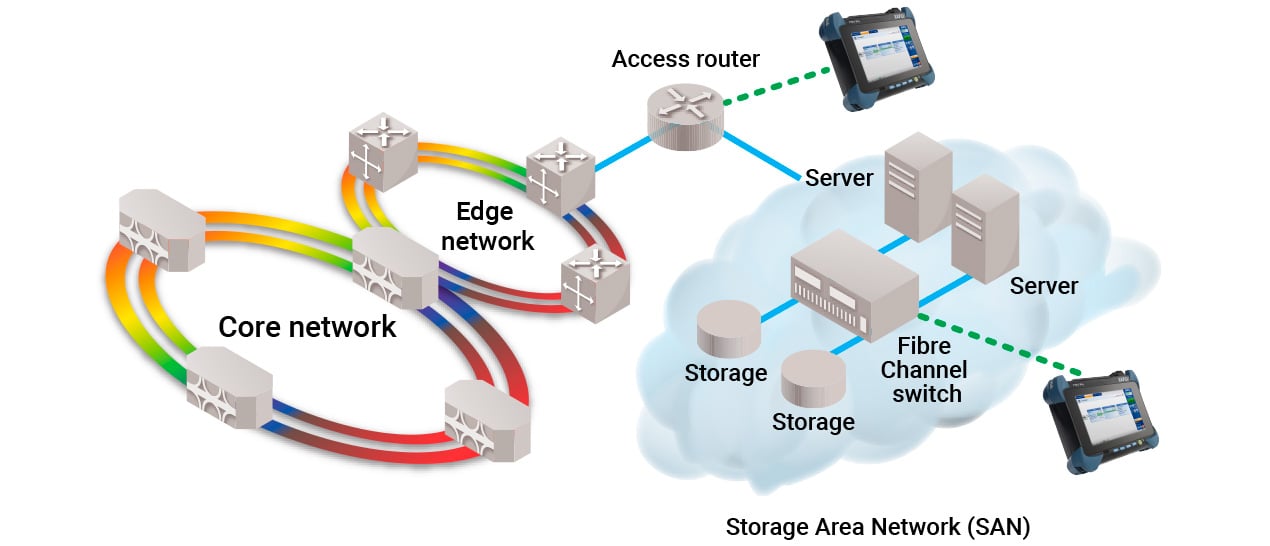

Figure 1. Fibre Channel within a network architecture.

Fibre Channel, as defined for the storage environment, was predicated on the idea that users want to access the storage array cleanly, quickly, without latency and as securely as possible. It plays nicely in the 64G ecosystem, enabling interoperability among vendors and with legacy technologies. This means that when we deploy new, high-speed technologies into a data center or storage facility, legacy support is guaranteed.

Security is also a major aspect of Fibre Channel’s usefulness; one doesn’t hear of anybody talking about a breach to their Fibre Channel storage fabric. It's defined and developed to be a robust, secure, low latency, scalable and highly reliable storage environment.

Over the years, the governing bodies (e.g., T11 workgroup) for Fibre Channel have worked diligently to ensure that both the physical structure of this protocol, and other protocols that sit atop it in storage array environments, are well-thought-out and well-tested.

Because of its security and reliability, Fibre Channel is used for high-stakes applications. For example, some of the new airframes that are coming off the production lines at Lockheed and Boeing use Fibre Channel in their fly-by-wire controls. It’s also used by financial institutions for secure archival storage.

Fibre Channel is scalable, congestion-free, highly efficient and secure. That’s why this protocol has stayed on the market and is still evolving.

What are the main benefits of Fibre Channel for data centers and data center interconnectivity?

It can’t be overstated: interoperability and security are the key benefits, and these attributes are why Fibre Channel is used by financial institutions and the U.S. government. Data needs to be secure, yet quick and easy to access, and Fibre Channel makes that possible.

When you're buying equipment for your data center—such as switches, host bus adapters or upgraded modules—and you choose a product that uses Fibre Channel, you know it has been well tested and will work straight out of the box.

Interoperability also plays a big role. PCI Express (PCIe) is the basic structure for communication within the server and its subcomponents. The NIC or HBA sits on the PCIe bus and handles the Ethernet and Fibre Channel interfaces for supported applications (e.g., storage arrays, USB devices, video). Everything in this ecosystem must interoperate.

In terms of upper-level protocols, Fibre Channel over Ethernet (FCoE) was a big deal a few years ago. Fibre Channel information was encapsulated and transported via Ethernet. Because the protocol ensured that this data was packaged and contained properly, it could be quickly offloaded and provided to the target or host when it reached its destination.
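To make the encapsulation idea concrete, here is a minimal Python sketch of wrapping a Fibre Channel frame in an Ethernet frame and unwrapping it at the destination. It is deliberately simplified: the function names are hypothetical, the Fibre Channel frame is treated as opaque bytes, and the FCoE-specific header and trailer fields (version, start-of-frame and end-of-frame markers, padding) as well as the Ethernet frame check sequence are omitted. Only the FCoE EtherType value, 0x8906, is taken from the real protocol.

```python
import struct

FCOE_ETHERTYPE = 0x8906  # EtherType registered for Fibre Channel over Ethernet


def encapsulate(dst_mac: bytes, src_mac: bytes, fc_frame: bytes) -> bytes:
    """Wrap an opaque FC frame in a simplified Ethernet frame (sketch only)."""
    if len(dst_mac) != 6 or len(src_mac) != 6:
        raise ValueError("MAC addresses must be exactly 6 bytes")
    # Ethernet header: destination MAC, source MAC, 16-bit EtherType (big-endian)
    header = dst_mac + src_mac + struct.pack("!H", FCOE_ETHERTYPE)
    return header + fc_frame


def decapsulate(eth_frame: bytes) -> bytes:
    """Return the FC payload if the frame carries the FCoE EtherType."""
    if len(eth_frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    (ethertype,) = struct.unpack("!H", eth_frame[12:14])
    if ethertype != FCOE_ETHERTYPE:
        raise ValueError("not an FCoE frame")
    return eth_frame[14:]


if __name__ == "__main__":
    fc = b"example-fc-frame"  # placeholder bytes, not a valid FC frame
    wire = encapsulate(b"\x01" * 6, b"\x02" * 6, fc)
    assert decapsulate(wire) == fc
```

The point of the sketch is that the Fibre Channel payload travels unchanged inside the Ethernet frame, so the receiving side can strip the outer header and hand the original frame to the target or host.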

These technologies don't work all by themselves. The following is an example from the world of financial trading. If you go into a branch of a financial services company or brokerage house and talk with an agent, that person will be working on a terminal that's connected to a network via Ethernet. As soon as a trade is submitted, it goes to the router, which then sends it to the secure database and the secure Fibre Channel storage area to execute the trade.

Figure 2. Financial services sector.

How has Fibre Channel kept pace with increasing data rates?

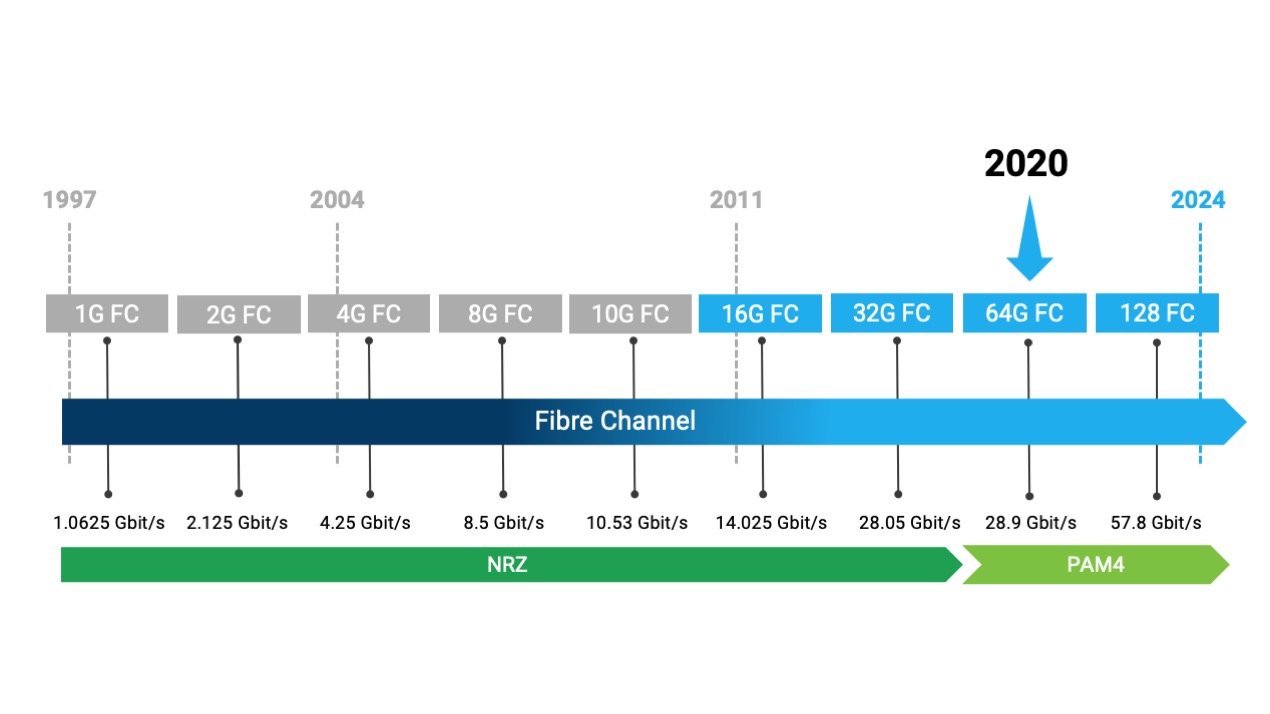

As mentioned earlier, Fibre Channel was first defined in 1988. Over the years, it has evolved to support higher and higher data rates. This progressed alongside Ethernet rates, starting at 1G in 1997 and then moving incrementally through 2G, 4G, 8G and 10G. In 2011, Fibre Channel rates jumped to 16G, followed very quickly by 32G. As other types of modulation came along, like PAM-4, 64G entered the Fibre Channel scene. PAM-4 signaling is becoming the de facto standard for achieving higher data rates in many signaling schemes, including PCIe, Ethernet and Fibre Channel. The next expected jump will be to 128G, which could happen as soon as 2024.

Figure 3. The evolution of Fibre Channel.
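Why PAM-4 helps with higher data rates can be shown with a little arithmetic: a two-level (NRZ) signal carries one bit per symbol, while a four-level (PAM-4) signal carries two, so the bit rate doubles at the same symbol rate. The Python sketch below illustrates this. The Gray-coded bit-to-level mapping is a common choice assumed here for illustration, and the 25 GBd symbol rate is a hypothetical round number, not a figure from the Fibre Channel specifications.

```python
from typing import List

# Assumed Gray-coded mapping of bit pairs to four amplitude levels (0..3);
# adjacent levels differ by a single bit.
GRAY_PAM4 = {"00": 0, "01": 1, "11": 2, "10": 3}


def bits_per_symbol(levels: int) -> int:
    """Bits carried per symbol; levels must be a power of two."""
    if levels < 2 or levels & (levels - 1):
        raise ValueError("levels must be a power of two >= 2")
    return levels.bit_length() - 1


def pam4_encode(bits: str) -> List[int]:
    """Map a string of '0'/'1' characters to PAM-4 levels, two bits at a time."""
    if len(bits) % 2:
        raise ValueError("PAM-4 needs an even number of bits")
    return [GRAY_PAM4[bits[i:i + 2]] for i in range(0, len(bits), 2)]


def bit_rate_gbps(symbol_rate_gbaud: float, levels: int) -> float:
    """Raw bit rate for a given symbol rate and number of amplitude levels."""
    return symbol_rate_gbaud * bits_per_symbol(levels)


if __name__ == "__main__":
    print(pam4_encode("00011110"))   # eight bits become four symbols
    print(bit_rate_gbps(25.0, 2))    # NRZ at the hypothetical symbol rate
    print(bit_rate_gbps(25.0, 4))    # PAM-4 at the same symbol rate
```

The trade-off, not modeled here, is that four levels are packed into the same voltage swing, so the signal is more sensitive to noise than a two-level signal.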

Why is 64G Fibre Channel the answer to today's storage challenges?

One key reason is the way storage media in data centers is evolving.

The highest speed in traditional rotating media is 24G. You probably don’t need a 64G Fibre Channel communications link to talk to 24G-rated SAS drives. However, the flash storage market space has exploded. Now we have flash arrays that can handle much faster gigabit transfers of information. If a data center has equally fast access on the Ethernet side—which is where the consumer interacts with the information—it’s necessary to access data at these speeds on the other side, if it’s in a secure Fibre Channel fabric.

In other words, to maintain equilibrium in a data center’s storage space, throughput needs to be equivalent. To use a traffic analogy: as you travel along a roadway, and you come to a congested area, it doesn’t matter if the speed limit is 100 km/h; if traffic is congested ahead then everything comes to a screeching halt. This means all the players in the ecosystem and the compute space need to be at equivalent rates to achieve user satisfaction. If the expected rate is 64G, that’s the rate at which consumers need to access the flash media so that we can compute equivalently across all the different connections. The option to have this speed and not use it is better than needing the speed and not having it.
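The equilibrium argument can be sketched in a few lines of Python: the rate a user sees across a chain of links is capped by the slowest link. The segment names and rates below are hypothetical examples chosen only to illustrate the point, and the model ignores protocol overhead, queuing and parallel paths.

```python
from typing import Dict, Tuple


def bottleneck(link_rates_gbps: Dict[str, float]) -> Tuple[str, float]:
    """Return the slowest segment and its rate; this caps end-to-end throughput."""
    if not link_rates_gbps:
        raise ValueError("at least one link is required")
    name = min(link_rates_gbps, key=link_rates_gbps.get)
    return name, link_rates_gbps[name]


if __name__ == "__main__":
    # Hypothetical path from the user-facing network to the storage media.
    path = {
        "Ethernet front end": 100.0,
        "Fibre Channel fabric": 32.0,
        "flash array": 64.0,
    }
    segment, rate = bottleneck(path)
    print(f"Limited to {rate} Gbit/s by: {segment}")

    # Upgrading the fabric moves the limit to the next-slowest segment.
    path["Fibre Channel fabric"] = 64.0
    print(bottleneck(path))
```

In this toy model, raising the fabric from 32G to 64G lifts the end-to-end ceiling to the rate of the flash array, which is the sense in which all players in the ecosystem need to run at equivalent rates.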

All this relates to the way access speeds, and consumer expectations, have evolved over the years.

When cell phones became super popular, with the arrival of 3G service for voice conversations and text messaging, data rates of a few Mbit/s were enough. Today, 5G requires 25 Gbit/s Ethernet backhaul because users want content that much faster. Plus, cell phones are no longer just communications devices; they are education and entertainment tools. This change in content consumption is driving the requirements for speed in our market space.

Application and consumer use is also influencing how data centers are designed and operated, and the technologies involved. New technologies (e.g., Ethernet drives) are being discussed. As these new technologies are introduced, they will enter an ecosystem that already involves other technologies for secure storage, like Fibre Channel hard drives and tape drives. Think about what this means for interoperability. Let’s say you pull out a KVM tray in your data center to do some equipment administration. It’s got USB ports on it. It’s got a SAS interface. It’s got a connection to these other serial protocols, and they all must work together, making interoperability an important checklist item in the 64G Fibre Channel story.

Want to learn more? Check out these 64G Fibre Channel resources:

64G Fibre Channel Webinar – Part 1: Unleashing the power of 64G Fibre Channel

64G Fibre Channel Webinar – Part 2: Overcoming testing challenges in the Fibre Channel ecosystem