How did cloud services become such a hot topic?

Cloud computing has been around for a long time. Enterprise IT departments have been using data centers with success for decades. The novelty of cloud computing comes with the “sharing aspect” of data centers. Data centers are now being used by multiple users with ubiquitous public access to the Internet. By pooling resources, cloud service providers can offer dynamic services tailored to users’ requirements.

Cloud computing has three enablers, as follows:

- Web browser: Because web browsers provide a common delivery platform that is independent of the underlying operating system (OS), applications can be created rapidly and efficiently.

- Virtualization: Virtualization is synonymous with more automation, the separation of hardware from software, increased agility, simplified design, policy-based management and network management tied into broader IT workflow systems. It will bring about considerable change in processes and in the way humans and systems interact, both with each other and among themselves.

- Broadband access: By centralizing and pooling resources such as servers, storage and applications, cloud providers can deliver services to a large group of users; the only major issue is the transfer of information between the user and the data center.

The basis of all cloud services is connectivity, which can be divided into two distinct subgroups, as follows:

- Transport Connectivity: Allows cloud users to connect to content in data centers. Keep in mind that transport connectivity can be delivered through fixed wireline connections or through mobile connections. Accordingly, the quality of these connections forms the foundation of the cloud user’s quality of experience. In order to deliver cloud transport connectivity, cloud carriers need high-performance services with multiple classes of service and high availability.

- Application Connectivity: Represents the connectivity between cloud content. This connectivity provides the ability to move data between computing resources across different data centers. With cloud application connectivity, services must be high performance and available at all times.

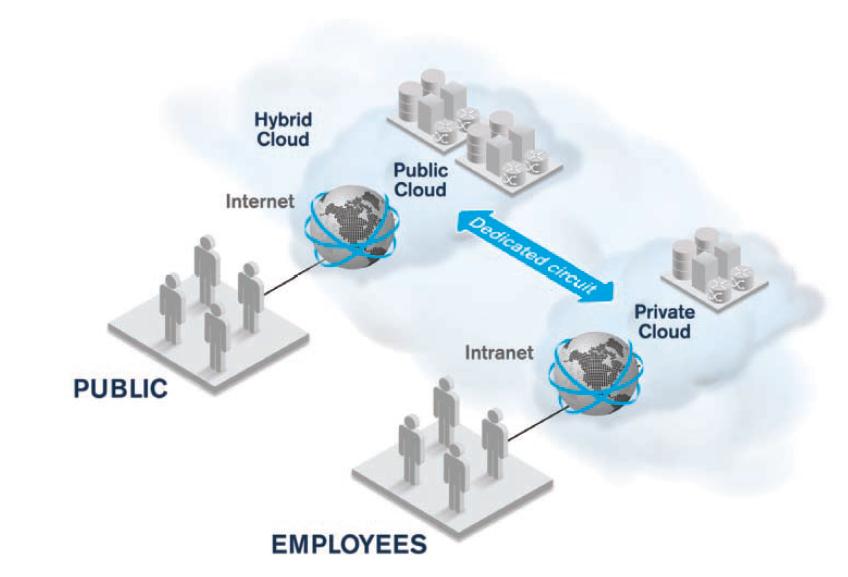

There are three models of cloud deployment:

- The public cloud model is the most common and ubiquitous type of deployment model. Via the Internet, cloud users can connect to applications such as Netflix and Amazon.com to access their services. This model is ideal for the general public, because it provides connectivity to the application at a very low cost.

- The private cloud model is the favorite of enterprise companies. Because this model is completely independent of the Internet, it is more secure and robust than the public model. A typical example of a private cloud model is a retail store that is connected to a centralized system providing centralized inventory management and cash register applications.

- The hybrid model offers the best of both worlds. A VPN connection is established to enable cloud traffic to flow between the private and public cloud.

Having covered the different types of connectivity and the models of cloud deployment, we can now assess the cloud network architecture requirements needed to deliver cloud services. These requirements can be grouped into the following four categories:

- Service-level specifications (SLS) are the technical specifications associated with the service-level agreement (SLA), and define the characteristics of the standard end-to-end transport connectivity services. Low frame loss and high availability are base requirements for any high-priority service. The impact of latency is twofold. First, latency affects the perceived quality of experience (QoE). Corporate users expect applications to respond rapidly, and will not lower their expectations if corporate applications are moved to the cloud. The perceived QoE can only be maintained if the transport connectivity service is able to deliver traffic with minimum latency. Second, latency affects the capacity of a transport connectivity service to deliver high-bandwidth applications. Because cloud applications are generally delivered over the TCP/IP protocol stack, transport connectivity attributes such as latency and frame loss can limit application throughput.

- Quality of service (QoS) involves three distinct considerations: the capacity of a carrier to deliver multiple classes of service, the carrier’s ability to access data inside the packet being transported and assign it to the appropriate grade of service, and its ability to ensure that QoS is measured from end to end. Although service attributes are the building blocks of transport connectivity, QoS is the most important component of cloud deployment.

- On-demand or self-service functionalities will need to be further developed by carriers moving forward, and will also need to span more than a single operator network. In addition, standards development organizations (SDOs) will need to bring standardized dynamic provisioning to the market. There are dynamic provisioning implementations in production today, but in order for carriers to be successful in the delivery of transport connectivity, they will need the help of SDOs.

- Reliability is essential, because mission-critical applications are moving to the cloud and users need constant connectivity to their applications. For this reason, Carrier Ethernet networks need to implement resiliency so that outages are kept to a minimum.
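The effect of latency and frame loss on TCP throughput, mentioned under service-level specifications above, can be illustrated with a short calculation. The sketch below uses the well-known Mathis et al. approximation for steady-state TCP throughput, which the original text does not name and which is brought in here only as an illustration. The function name and the example values are assumptions, and real throughput also depends on window sizes, the congestion-control algorithm and the loss pattern.

```python
import math


def mathis_throughput_bps(mss_bytes: int, rtt_s: float, loss_rate: float) -> float:
    """Approximate upper bound on steady-state TCP throughput, in bits per second.

    Mathis et al. approximation: rate <= (MSS / RTT) * (C / sqrt(p)),
    with C = sqrt(3/2) for periodic loss and no delayed ACKs.
    """
    if rtt_s <= 0:
        raise ValueError("rtt_s must be positive")
    if not 0 < loss_rate < 1:
        raise ValueError("loss_rate must be strictly between 0 and 1")
    constant = math.sqrt(3.0 / 2.0)
    return (mss_bytes * 8 / rtt_s) * (constant / math.sqrt(loss_rate))


if __name__ == "__main__":
    # Illustrative values only (assumptions, not measurements from the text).
    for rtt_ms in (5, 20, 80):
        for loss in (1e-5, 1e-4, 1e-3):
            rate = mathis_throughput_bps(1460, rtt_ms / 1000, loss)
            print(f"RTT {rtt_ms:>3} ms, loss {loss:.0e}: ~{rate / 1e6:8.1f} Mbit/s")
```

Under this model, doubling the round-trip time halves the achievable throughput, and a fourfold increase in loss has the same effect, which is why both attributes appear in the service-level specifications.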

Communication service providers (CSPs), or carriers, understand that they will need to provide more value-added services in order to increase their top-line revenue. Many different value-added services could be added to a carrier’s product portfolio, but because network and cloud access services are the basis of the quality equation for cloud services, the evolution from CSP to cloud service provider is a natural step.

Traditional telecom service providers are already trying to increase their top line by offering more managed services. However, these efforts must evolve to account for the fact that content and applications are what people value and are willing to pay for. Service providers need to find ways to pair their high-performance networks with cloud and content providers in ways that allow them to participate in current revenue streams. Doing so will require changes in how they create, activate and assure the services that let them interact with cloud and content providers. They also need to increase their focus on the end service or application the user is buying.

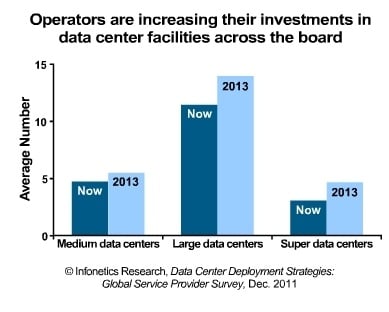

In a recent report, Infonetics analysts interviewed 19 major telecom operators, cable operators, data center/content specialists and colocation providers with data centers containing 100 or more servers. Together, the respondent operators represent a significant 20% of the world’s telecom carrier revenue and 20% of the world’s telecom CAPEX, and are distributed across North America, EMEA (Europe, Middle East, Africa), and Asia Pacific.

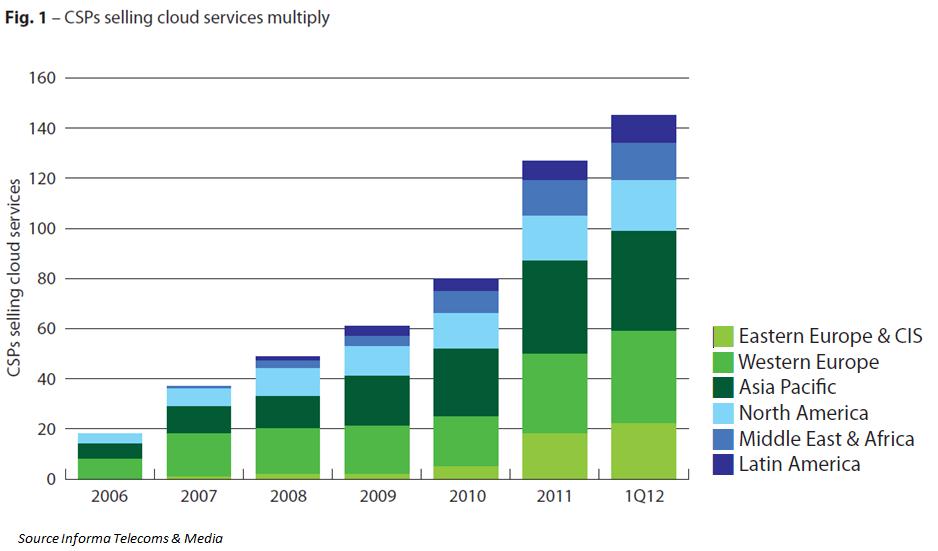

According to Informa, more and more carriers are turning to cloud services for additional revenues. CSPs need to remember that cloud computing can and must defend their core broadband network. Cloud services must fulfill a primary function of securing additional revenues on the network assets that make carriers positively different from pure-play cloud-service providers.

Challenges

When it comes to cloud challenges, QoE is at the top. Cloud users and cloud consumers (in this case defined as an organization or person that buys services from the cloud provider to use or run an application) are on the receiving end of QoE. Cloud providers are responsible for the cloud data center and transport connectivity. This responsibility translates into an SLA between the cloud provider and cloud consumer. The cloud provider does not own the carrier network, but leases transport connectivity from the cloud carrier with an attached SLA.

As mentioned earlier, cloud carriers are responsible for availability, reachability, bandwidth and service attributes, such as frame loss, latency and packet jitter.

Cloud providers need to take a closer look at the data center infrastructure. Virtual machine availability, server utilization and allocation, and storage capacity all fall within their responsibilities.

Because cloud providers and cloud carriers together connect cloud users to the data center, both need to ensure that the services are available to the users, that the Web response time is within the SLA, and that the DNS service is accurate and performs as expected.

The most important shared responsibility is the time it takes to turn up a customer. Because most of that time is spent activating the cloud access service, carriers will need to streamline their operations to bring turn-up time down to a reasonable amount.

Once the customer order for the cloud access service has been received, the carrier will start the life cycle of the service. The three major stages of the life cycle are:

- Provisioning and turn-up

- Performance management

- Fault management

Currently, there is only one standardized methodology for testing services during service activation: ITU-T Recommendation Y.1564. As is true for all cloud connectivity, strict performance requirements are in place to ensure that applications run efficiently over Carrier Ethernet-based services.

Cloud providers can offer multiple services, and each of them can have its own class of service. A test methodology is therefore required that can measure multiple key performance indicators (KPIs), such as frame delay, inter-frame delay variation and frame loss, on a per-class-of-service basis. ITU-T Y.1564, which is referred to as EtherSAM at EXFO, is the only standardized methodology capable of this type of measurement.

Y.1564 is a two-step methodology that validates the configuration and performance of the different services. During the configuration test, the test instruments measure CIR/CBS, EIR/EBS, traffic policing and Ethernet-based service attributes for a single service at a time. This validates that the bandwidth profile and all major configurable parameters are properly configured on a per-service basis.

Once this step is completed, a second test, i.e., the performance test, will be conducted to test all configured services concurrently. By simulating all services simultaneously at their configured CIR, carriers will ensure that cloud services can be transported across the cloud access network to their maximum committed rate, and that the KPIs meet the service acceptance criteria for each of the services.
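The pass/fail logic of the performance test can be sketched as a comparison of each service's measured KPIs against its service acceptance criteria. The example below is a minimal illustration only: the class names, field names and threshold values are hypothetical and are not taken from the Y.1564 recommendation or from any test instrument's API.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical per-service acceptance thresholds."""
    max_frame_delay_ms: float
    max_delay_variation_ms: float
    max_frame_loss_ratio: float


@dataclass(frozen=True)
class MeasuredKpis:
    """Hypothetical KPIs measured while all services run at their CIR."""
    frame_delay_ms: float
    delay_variation_ms: float
    frame_loss_ratio: float


def failed_kpis(measured: MeasuredKpis, sac: AcceptanceCriteria) -> List[str]:
    """Return the names of the KPIs that exceed their thresholds."""
    failures = []
    if measured.frame_delay_ms > sac.max_frame_delay_ms:
        failures.append("frame delay")
    if measured.delay_variation_ms > sac.max_delay_variation_ms:
        failures.append("inter-frame delay variation")
    if measured.frame_loss_ratio > sac.max_frame_loss_ratio:
        failures.append("frame loss")
    return failures


def performance_test_verdict(
    results: Dict[str, Tuple[MeasuredKpis, AcceptanceCriteria]]
) -> Dict[str, List[str]]:
    """Map each service name to its failed KPIs; an empty list means pass."""
    return {name: failed_kpis(m, sac) for name, (m, sac) in results.items()}


if __name__ == "__main__":
    # Illustrative numbers only.
    results = {
        "voice": (MeasuredKpis(4.0, 0.5, 0.0), AcceptanceCriteria(10.0, 2.0, 0.0001)),
        "data": (MeasuredKpis(35.0, 6.0, 0.002), AcceptanceCriteria(30.0, 10.0, 0.001)),
    }
    for service, failures in performance_test_verdict(results).items():
        print(service, "PASS" if not failures else "FAIL: " + ", ".join(failures))
```

Evaluating each service against its own criteria mirrors the per-class-of-service requirement: one service can fail the test while the others running concurrently still pass.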

In application connectivity, the type of traffic being transmitted calls for different service attributes. Because virtual machines can be migrated from data center to data center, and large amounts of data need to be replicated at another location, the service requirements will be different. Fortunately, from a test-methodology perspective, the known methodologies are still applicable. High bandwidth, zero frame loss and low latency can be measured with Y.1564.

Because large amounts of data are being transferred, a TCP performance test can be performed to ensure that files are transferred efficiently across the network. This matters because the current service testing trend is based on the ITU-T Y.1564 methodology, which can only provide a reliable assessment of network performance if the applications running on the network are UDP-based. If they are running on TCP, the methodology will only provide a general assessment of the network’s performance, and will not be able to measure the end user’s QoS.
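One reason a link that passes a rate-based test can still deliver slow TCP transfers is that TCP can have at most one window of unacknowledged data in flight per round trip. The sketch below computes the bandwidth-delay product, that is, the window needed to fill a link, and the throughput ceiling imposed by a given window. The function names and the example figures are assumptions chosen for illustration.

```python
def bandwidth_delay_product_bytes(link_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep a link of this rate fully used."""
    if link_bps <= 0 or rtt_s <= 0:
        raise ValueError("link_bps and rtt_s must be positive")
    return link_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Throughput ceiling when at most one window is sent per round trip."""
    if window_bytes <= 0 or rtt_s <= 0:
        raise ValueError("window_bytes and rtt_s must be positive")
    return window_bytes * 8 / rtt_s


if __name__ == "__main__":
    # Illustrative case: a 1 Gbit/s inter-data-center link with a 20 ms round trip.
    link_bps, rtt_s = 1e9, 0.020
    needed = bandwidth_delay_product_bytes(link_bps, rtt_s)
    print(f"Window needed to fill the link: {needed / 1e6:.2f} MB")

    # A 65,535-byte window is the largest possible without TCP window scaling.
    ceiling = window_limited_throughput_bps(65535, rtt_s)
    print(f"Ceiling with a 65,535-byte window: {ceiling / 1e6:.1f} Mbit/s")
```

In this illustrative case the window needed to fill the link is far larger than an unscaled TCP window, so a replication job could run well below the committed rate even though the Ethernet service itself meets its acceptance criteria.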

For more information about TCP testing methodologies and tools that service providers can use to prove that their network is not at fault, watch the TCP Testing: Measuring True Customer Experience webinar and read the TCP Technology and Testing Methodologies white paper.